Introduction

A key objective of this project was to establish an industry-quality Wwise project structure, with consistent naming conventions, hierarchies and construction of event behaviour. The official Audiokinetic website provides ample information on the Wwise Authoring application's features and demonstrates example use cases for each view and layout. However, Audiokinetic provides significantly less information on best practices and industry-standard workflows for Wwise. The video series “Setting up a AAA Wwise Project” by Cujo Sound (Jacobsen, 2023) provides exactly this information, along with many other tips and tricks for optimizing workflows in Wwise.

Structure, Setup & Mixing

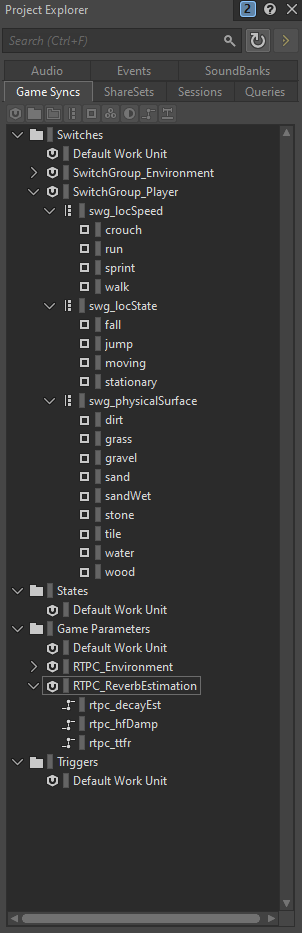

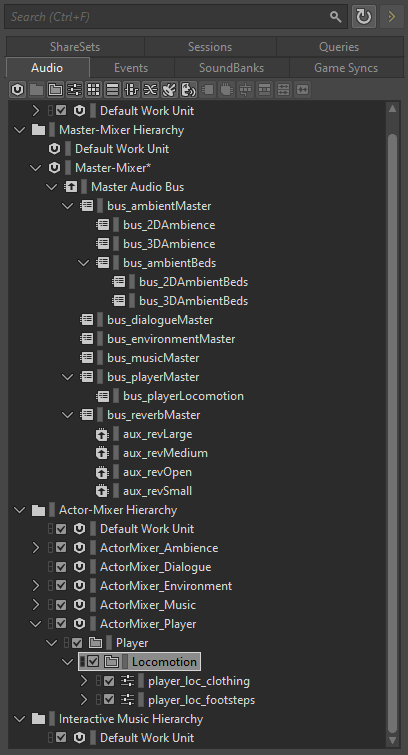

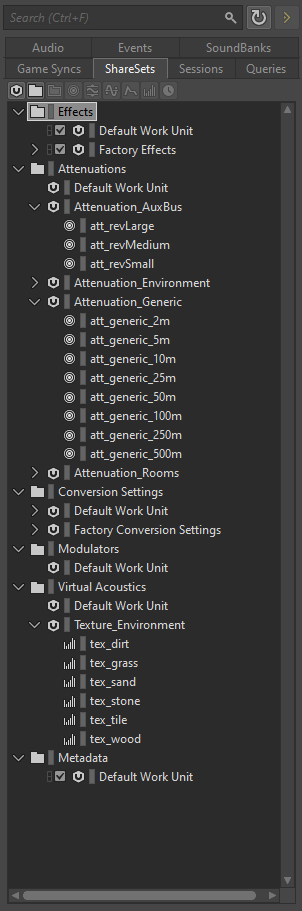

Before implementing any audio, I created a “template” of sorts, based on the guidance provided by Cujo Sound, to serve as a framework for constructing my Wwise project. This involved three things:

- establishing a naming scheme;
- creating generic attenuation ShareSets, game states, switches and RTPCs;
- organising the Project Explorer with Work Units, folders and Actor-Mixer groups.

For naming conventions, I settled on PascalCase for Work Units, folders, Actor-Mixers and anything else used for organisational purposes. For all types of assets (Sound SFX, Switches, Attenuations, Aux buses, etc.) I used camelCase. Additionally, most assets are prefixed with a three-letter abbreviation. There are some exceptions, such as “rtpc”, because abbreviating it to “rtp” would cause more confusion than it would solve.
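The following Python sketch makes the convention concrete by checking names against it. The prefix set (`sfx`, `att`, `swi`, `aux`, `sta`) is hypothetical, since the project's actual abbreviation list is not given here. The helper names are also illustrative only; Wwise itself does not enforce any of these rules.

```python
import re

# Hypothetical three-letter asset prefixes (the project's real list is not stated).
ASSET_PREFIXES = {"sfx", "att", "swi", "aux", "sta"}
# Exceptions to the three-letter rule, e.g. "rtpc" instead of the confusing "rtp".
PREFIX_EXCEPTIONS = {"rtpc"}

PASCAL_CASE = re.compile(r"^[A-Z][a-z0-9]*(?:[A-Z][a-z0-9]*)*$")
CAMEL_CASE = re.compile(r"^[a-z][a-z0-9]*(?:[A-Z][a-z0-9]*)*$")


def is_valid_organisational_name(name: str) -> bool:
    """Work Units, folders and Actor-Mixers use PascalCase, e.g. 'PlayerLocomotion'."""
    return bool(PASCAL_CASE.match(name))


def is_valid_asset_name(name: str) -> bool:
    """Assets use camelCase starting with a known lowercase prefix, e.g. 'sfxJarBreak'."""
    if not CAMEL_CASE.match(name):
        return False
    for prefix in ASSET_PREFIXES | PREFIX_EXCEPTIONS:
        rest = name[len(prefix):]
        # The prefix must be followed by a capitalised word, not end the name.
        if name.startswith(prefix) and rest and rest[0].isupper():
            return True
    return False
```

A check like this could run over an exported object list to catch stray names before they spread through the hierarchy.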

Although not entirely standard, this convention suited my workflow best, making navigation and organisation significantly easier.

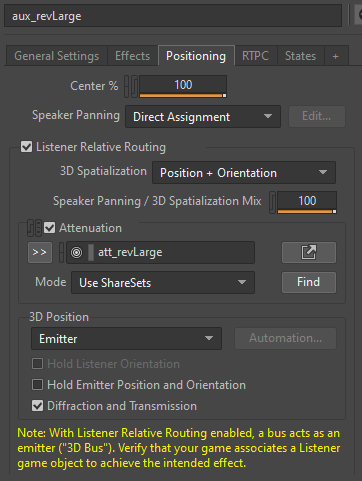

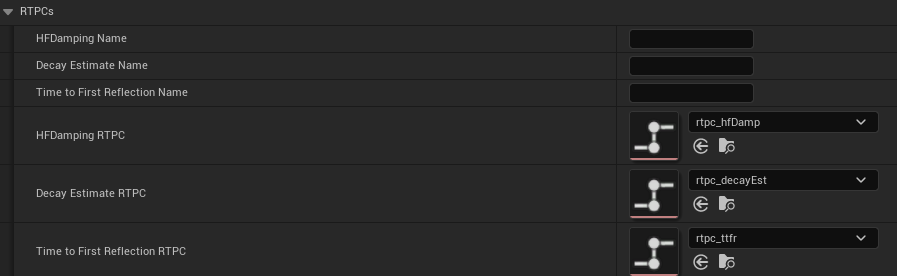

This and the previous images show the settings and assets relevant to the reverb auxiliary buses (hereafter “Aux buses”) and their integration into the Wwise spatial audio pipeline. Aux buses apply reverb to audio emitters that meet two conditions: they have “Use game-defined auxiliary sends” enabled, and they are located inside a Wwise Room or Reverb Volume.

Enabling “Listener relative routing” on an Aux bus and assigning it an attenuation causes that Aux bus to behave like an emitter, spatialized to the Room or Volume it is associated with. This allows the reverberated audio to use the diffraction and transmission features of Wwise spatial audio, routing through portals in the same way as spatialized event emitters.

Reverb estimation is a feature of the Wwise integration that automatically adjusts reverb parameters to suit the physical dimensions of the Room using the Aux bus. It requires the relevant RTPCs to be assigned in the integration settings. The reverb's behaviour is then set up in Wwise Authoring, driven by those RTPC values.
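The sketch below gives a rough idea of the kind of calculation behind a decay estimate. It applies Sabine's formula, RT60 = 0.161 V / (S a), to a box-shaped Room. This is an assumption for illustration, not the integration's documented algorithm. The mean absorption coefficient and the RTPC range used for clamping are hypothetical values.

```python
from dataclasses import dataclass


@dataclass
class BoxRoom:
    """A rectangular room; all dimensions in metres."""
    width: float
    depth: float
    height: float

    @property
    def volume(self) -> float:
        return self.width * self.depth * self.height

    @property
    def surface_area(self) -> float:
        w, d, h = self.width, self.depth, self.height
        return 2.0 * (w * d + w * h + d * h)


def sabine_rt60(room: BoxRoom, mean_absorption: float) -> float:
    """Estimated decay time in seconds: RT60 = 0.161 * V / (S * a)."""
    return 0.161 * room.volume / (room.surface_area * mean_absorption)


def clamp_to_rtpc_range(value: float, lo: float, hi: float) -> float:
    """Keep the estimate inside the (hypothetical) range the RTPC curve was authored for."""
    return max(lo, min(value, hi))
```

Consider a 10 × 8 × 3 m room with a mean absorption of 0.2. `sabine_rt60(BoxRoom(10.0, 8.0, 3.0), 0.2)` gives roughly 0.72 s. A value of this kind is what the RTPC-driven curves in Wwise Authoring would respond to.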

Audio Events

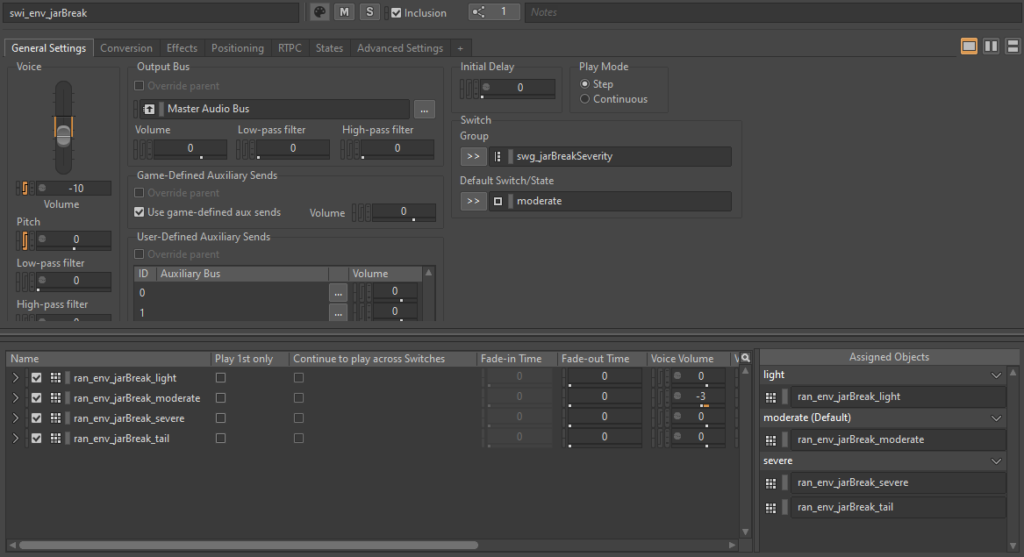

Jar break event

Creating the event for a physics interaction such as ceramic jars breaking was relatively straightforward. To differentiate between impact velocities and jar sizes, I used a switch container holding random containers. These trigger sounds of varying intensity depending on the switch value.

As well as the switch, the switch container's pitch and volume are automated by the same RTPC that determines the switch state. Together with the random containers, this automation provides substantial variation between triggers. It also gives a clear progression as impact velocity increases.
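The RTPC automation described above can be pictured as piecewise-linear curves that map the 0-1 impact value onto volume and pitch offsets. The sketch below assumes linear interpolation between points, which is only one of the curve shapes Wwise offers. The curve points are hypothetical rather than the project's actual values.

```python
from bisect import bisect_right

# Hypothetical curve points as (RTPC value, property value); not the project's real curves.
VOLUME_CURVE_DB = [(0.0, -12.0), (0.5, -4.0), (1.0, 0.0)]
PITCH_CURVE_CENTS = [(0.0, -100.0), (1.0, 100.0)]


def evaluate_linear_curve(points, x):
    """Evaluate a piecewise-linear curve, clamping to the first/last point outside its range."""
    if x <= points[0][0]:
        return points[0][1]
    if x >= points[-1][0]:
        return points[-1][1]
    xs = [p[0] for p in points]
    i = bisect_right(xs, x)  # index of the first point to the right of x
    (x0, y0), (x1, y1) = points[i - 1], points[i]
    t = (x - x0) / (x1 - x0)
    return y0 + t * (y1 - y0)
```

Because volume and pitch read the same RTPC as the switch, a single value sent by the game moves all three together.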

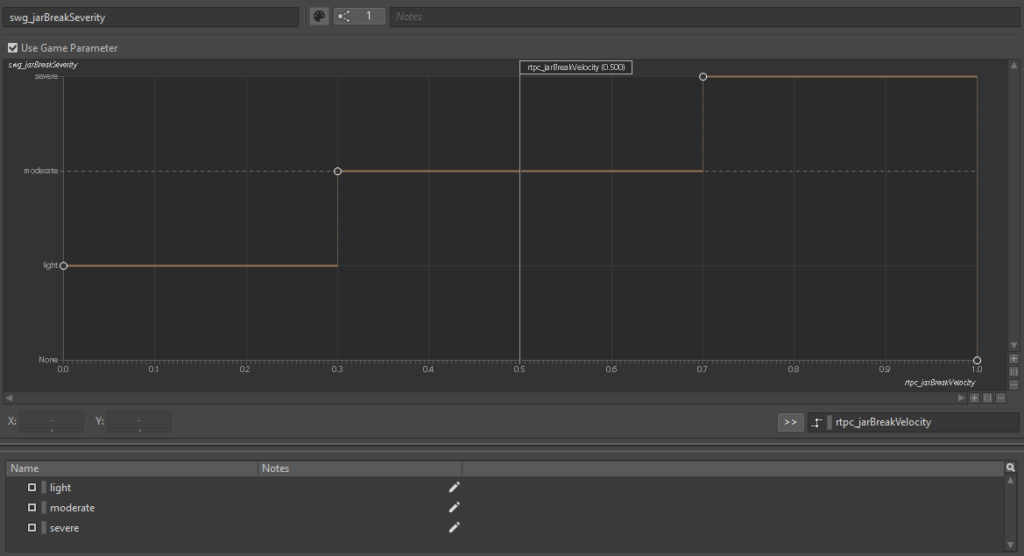

Rather than being set directly, the switch state for the jar break intensity is driven by an RTPC in Wwise. This allows the break intensity to be controlled by a normalized value (0-1) instead of by specifying states manually. This approach is much better suited to continuous inputs such as floats and vectors. The input range can be significantly larger, and it requires less interpretation in scripting.
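Here is a minimal sketch of the idea. It assumes the game normalizes impact speed against a fixed maximum, and that the switch group maps RTPC ranges to states (Wwise Switch Groups can be driven by a Game Parameter in this way). `MAX_IMPACT_SPEED`, the thresholds and the state names are all hypothetical.

```python
import math

# Hypothetical maximum impact speed (m/s) used for normalization.
MAX_IMPACT_SPEED = 10.0

# Hypothetical switch states, each paired with the lower bound of its RTPC range.
INTENSITY_THRESHOLDS = [(0.0, "Light"), (0.33, "Medium"), (0.66, "Heavy")]


def normalize_impact(velocity):
    """Convert an impact velocity vector (x, y, z) into a 0-1 RTPC value."""
    speed = math.hypot(*velocity)
    return min(speed / MAX_IMPACT_SPEED, 1.0)


def switch_for_rtpc(value):
    """Return the state whose range contains the RTPC value."""
    state = INTENSITY_THRESHOLDS[0][1]
    for lower_bound, name in INTENSITY_THRESHOLDS:
        if value >= lower_bound:
            state = name
    return state
```

In this arrangement, the script's only job is producing the normalized value. The mapping from value to state lives in the authoring tool, where it can be tuned without code changes.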

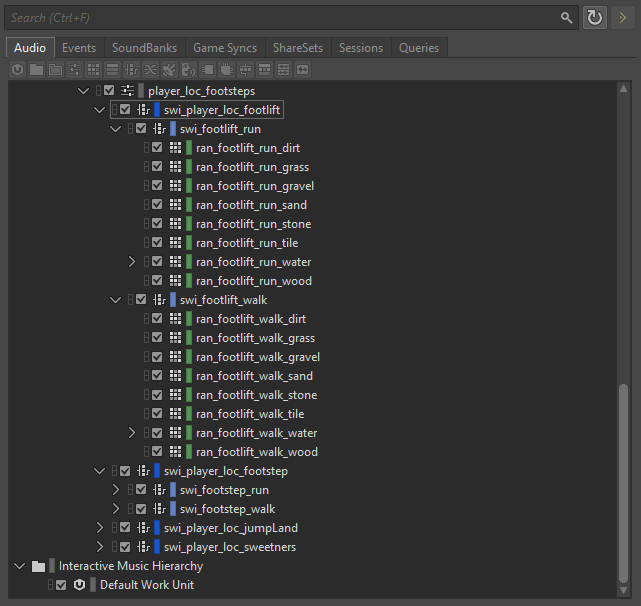

Player Locomotion

Player locomotion audio is a very common yet surprisingly complex system to set up. Asset variations need to differ enough to avoid repetition, while avoiding any easily identifiable tones or rhythmic gestures. Additionally, there are many different surface types and locomotion speeds. The number of variations per asset therefore needs to be kept as low as possible, to reduce the memory required to post the event.
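Wwise random containers can avoid repeating the last few played variations (the “Avoid repeating last x played” option), which helps a small variation set sound less repetitive. The sketch below imitates that behaviour in Python to show how far a handful of variations can go. The class and its parameters are illustrative and not part of any Wwise API.

```python
import random
from collections import deque


class RandomContainer:
    """Picks variations at random while never repeating any of the last `avoid_last` picks."""

    def __init__(self, variations, avoid_last, rng=None):
        if avoid_last >= len(variations):
            raise ValueError("avoid_last must be smaller than the number of variations")
        self.variations = list(variations)
        self.recent = deque(maxlen=avoid_last)  # most recent picks, oldest dropped first
        self.rng = rng if rng is not None else random.Random()

    def pick(self):
        candidates = [v for v in self.variations if v not in self.recent]
        choice = self.rng.choice(candidates)
        self.recent.append(choice)
        return choice
```

With four variations and `avoid_last=2`, every pick differs from the two before it. This is a cheap way to hide repetition without adding assets.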

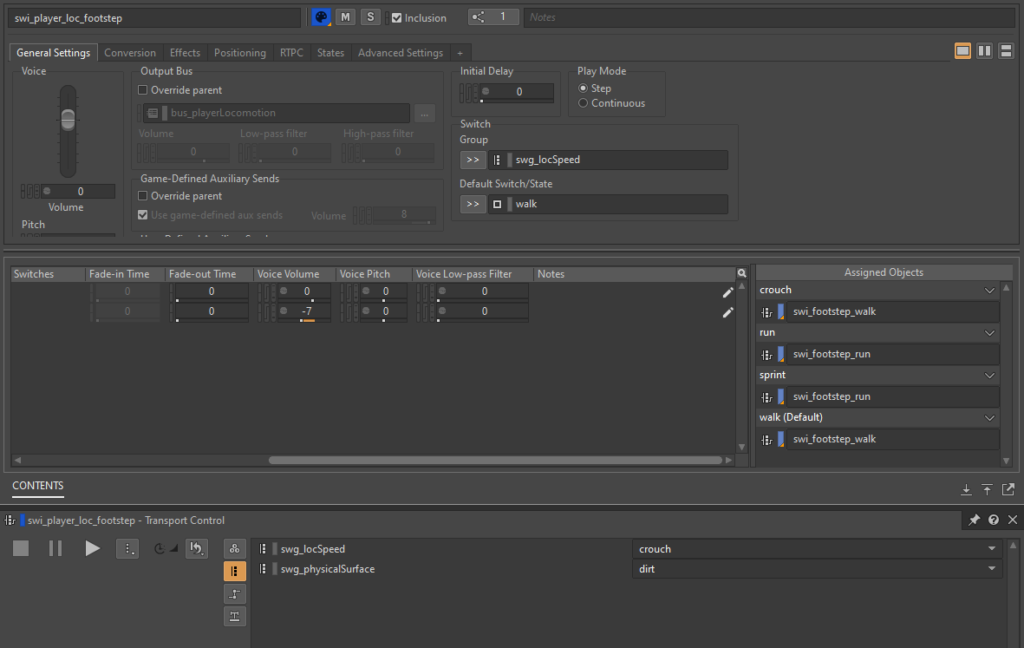

All surface-dependent player locomotion events contain two layers of switch containers: speed and surface. I implemented only “Walk” and “Run” speeds, because the player controller in the demo game had no analogue control of locomotion speed; the player is either running or not. Beneath this, each locomotion speed has its own set of random containers. These hold the footstep and foot-lift asset variations required to prevent repetition.
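The two switch layers can be read as a nested lookup. Assuming speed is the outer layer, speed selects a branch, surface selects a random container, and the random container picks a variation. The sketch uses hypothetical surface and asset names. It also includes a default surface, loosely mirroring the default switch/state option on a Wwise switch container.

```python
import random

# Hypothetical hierarchy: speed switch -> surface switch -> random container of variations.
LOCOMOTION = {
    "Walk": {
        "Stone": ["walkStone01", "walkStone02", "walkStone03"],
        "Wood": ["walkWood01", "walkWood02", "walkWood03"],
    },
    "Run": {
        "Stone": ["runStone01", "runStone02", "runStone03"],
        "Wood": ["runWood01", "runWood02", "runWood03"],
    },
}
DEFAULT_SURFACE = "Stone"  # hypothetical fallback when the surface switch is unknown


def resolve_footstep(speed: str, surface: str, rng: random.Random) -> str:
    """Walk the speed and surface switch layers, then pick a variation at random."""
    surfaces = LOCOMOTION[speed]
    variations = surfaces.get(surface, surfaces[DEFAULT_SURFACE])
    return rng.choice(variations)
```

Keeping surface as the inner layer means adding a new surface touches every speed branch. This is one reason the variation count per container matters for memory.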

As assets exist for only two locomotion speeds, the “crouch” and “sprint” states of the switch group were populated with the existing walk and run assets. In my current implementation the player cannot crouch or sprint, so these states are never used. However, if player locomotion were extended in the future, the locomotion audio system would continue to function correctly.
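The placeholder assignment can be sketched as a simple table from switch state to container. Which existing container each placeholder state reuses (crouch to walk, sprint to run) is an assumption. The table and function names are hypothetical.

```python
# Hypothetical assignment of locomotion-speed switch states to containers.
# Crouch and Sprint reuse existing containers until dedicated assets exist
# (the exact pairing is an assumption).
SPEED_STATE_ASSIGNMENTS = {
    "Walk": "Walk",
    "Run": "Run",
    "Crouch": "Walk",
    "Sprint": "Run",
}


def container_for_speed_state(state: str) -> str:
    """Return the container played for a given speed switch state."""
    return SPEED_STATE_ASSIGNMENTS[state]
```

Every state in the switch group already resolves to a container. Future gameplay code that sets crouch or sprint would therefore still produce sound, and swapping in dedicated assets becomes an authoring change rather than a code change.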