Introduction

This section of the devlog will go over the common audio systems I created for this project's game audio implementation (brace yourself, it's a long one). I will be explaining and providing examples of both the blueprints and the C++ functions used to create this behaviour in-engine. The features I will be covering are the following:

- Footstep BP Notify

- Static Audio Emitter BP

- Spline Audio Emitter BP

- Jar Break Audio BP

- Wwise Rooms & Portals

- Static Audio Functions Library (C++)

- AkExtensions Static Function Library (C++)

Common Audio Systems

Game audio implementation often requires similar audio systems and functionality across many game genres. In this section, I will go over several of these systems, explaining the problems they solve as well as my process for creating them.

Footstep BP Notify

If a game character has feet, you are going to need footsteps. My preferred approach to implementing player locomotion sounds is through custom Animation Notify Blueprints (ANs). ANs are an effective way to add audio to animations, as they let you specify exactly where on the animation timeline audio should be posted.

Setting up custom Anim Notifies like this allows parameters to be set for each instance of the Notify. This lets us do things like customize enum values, assign different events and pass values to the notify function. Below, I've embedded the blueprint for this custom anim notify. In my project, I used it as a parent class from which several variations were derived.

As far as how this Blueprint functions, it boils down to a few important steps.

- Creating an Ak Component (the Wwise audio component that holds the data required for posting audio)

- Determining surface type for footstep (see: C++ Functions)

- Setting audio parameters (surface type, locomotion speed, etc.)

- Posting the audio event

- Handling debug options

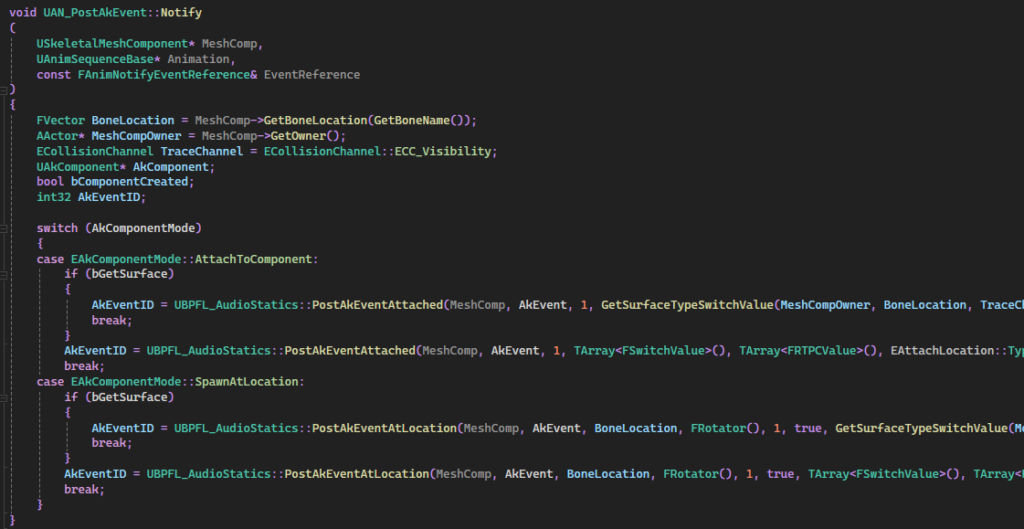

This blueprint notify works well and is a valid solution for footstep audio. However, I had originally planned to translate the blueprint into a more concise and comprehensive C++ class. A partially complete override of the function "Notify" can be seen above, but unfortunately I was not able to complete this feature before the project deadline.

Determining the appropriate Ak Switch assets to pass through based on the physical surface proved more difficult than anticipated. The next time I approach this issue, I will look into creating a subclass of "UDeveloperSettings" that holds a TMap between the enum "ESurfaceType" and "UAkSwitchValue", so that the switch value for the surface type returned by my function can be looked up easily.
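To illustrate the lookup idea, here is a minimal, engine-agnostic sketch. It is not Unreal or Wwise code: the enum values, the `FootstepAudioSettings` struct and the switch names are all hypothetical, and the switch values are plain strings standing in for `UAkSwitchValue` assets. The key design point is a single table owned by a settings object, plus a fallback for unmapped surfaces.

```cpp
#include <map>
#include <string>

// Hypothetical stand-in for the project's ESurfaceType enum (values assumed).
enum class ESurfaceType { Default, Grass, Gravel, Wood, Stone };

// Hypothetical model of the planned settings object. In Unreal this would be a
// UDeveloperSettings subclass holding a TMap<ESurfaceType, UAkSwitchValue*>;
// here each switch value is represented by its name only.
struct FootstepAudioSettings {
    // Surface type -> Wwise switch value name (names are assumptions).
    std::map<ESurfaceType, std::string> SurfaceSwitches{
        {ESurfaceType::Grass, "Grass"},
        {ESurfaceType::Gravel, "Gravel"},
        {ESurfaceType::Wood, "Wood"},
        {ESurfaceType::Stone, "Stone"},
    };

    // Used when a surface type has no explicit mapping.
    std::string FallbackSwitch = "Default";

    // Returns the switch value for Surface, or FallbackSwitch if unmapped.
    const std::string& GetSwitchForSurface(ESurfaceType Surface) const {
        auto It = SurfaceSwitches.find(Surface);
        return It != SurfaceSwitches.end() ? It->second : FallbackSwitch;
    }
};
```

In this sketch, the footstep notify would call `GetSwitchForSurface` with the result of the surface-detection function and set the returned switch on its Ak Component before posting the event.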

Audio Emitter BPs

Static Emitter

Creating the actor blueprint for posting static audio events in a level was a simple process (once the associated C++ function had been created). The blueprint contains a collider and several variables relating to audio playback (AkEvent, Volume Offset, Audio Parameters etc.).

To keep the active voice count low and save on resources, audio is posted when the player enters the collider and stopped when the player exits it. At construction, the collider's radius is set to the maximum attenuation distance of the associated Ak audio event, so playback starts as soon as the player comes within earshot.

This actor calls the functions "Post Ak Event Attached" and "Execute Action on Ak Game Object". These C++ functions are discussed further later in this devlog post. Above, I've embedded the actor blueprint for reference.
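The enter/exit behaviour described above can be modelled as a small state machine. This is a hypothetical, engine-agnostic sketch: `StaticEmitterSketch` is an invented name, and instead of calling "Post Ak Event Attached" or "Execute Action on Ak Game Object" it only records "Post" and "Stop" so the logic can be checked in isolation.

```cpp
#include <string>
#include <vector>

// Hypothetical model of the static emitter's playback logic. The real actor
// posts and stops a Wwise event; here those calls are only logged.
class StaticEmitterSketch {
public:
    // At construction, size the trigger to the event's max attenuation radius.
    explicit StaticEmitterSketch(float MaxAttenuationRadius)
        : TriggerRadius(MaxAttenuationRadius) {}

    float GetTriggerRadius() const { return TriggerRadius; }
    bool IsPlaying() const { return bPlaying; }
    const std::vector<std::string>& GetLog() const { return Log; }

    // Called when the player starts overlapping the trigger.
    void OnPlayerEnter() {
        if (bPlaying) return;   // never start a second voice
        Log.push_back("Post");  // stand-in for posting the Ak event
        bPlaying = true;
    }

    // Called when the player stops overlapping the trigger.
    void OnPlayerExit() {
        if (!bPlaying) return;  // nothing to stop
        Log.push_back("Stop");  // stand-in for a Stop action on the game object
        bPlaying = false;
    }

private:
    float TriggerRadius;
    bool bPlaying = false;
    std::vector<std::string> Log;
};
```

Guarding both handlers against repeated calls keeps the voice count at one per emitter even if overlap events fire more than once.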

Spline Emitter

All the audio playback functionality of this actor blueprint is practically the same as the previously discussed "Static Emitter", so I won't go back over those aspects.

A very common problem in game audio is representing large areas of sound that are not localised to any one point in space, such as rivers, treelines, oceans and crowds. Applying a large attenuation to a single emitter creates unusual spatialisation as you move away from the audio source and the object it's supposed to represent, while creating multiple audio emitters can introduce phase issues, excessive overhead and, again, abnormal spatial behaviour.

One solution to this problem is a spline. Splines allow us to define a path along the perimeter of a sound-emitting area; with some maths to find the point on the spline closest to the player's current location, a single audio emitter can be moved to that point and used to represent a large area of sound.
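The "closest point" maths can be sketched by treating the spline as a polyline of sampled points: project the listener onto each segment, clamp to the segment's ends, and keep the nearest result. This is an approximation of a curved spline, and the `Vec3` type and function names are hypothetical. In Unreal itself, `USplineComponent::FindLocationClosestToWorldLocation` performs this query on the actual curve.

```cpp
#include <cstddef>
#include <vector>

// Minimal hypothetical vector type for this sketch.
struct Vec3 { float X, Y, Z; };

Vec3 Sub(Vec3 A, Vec3 B) { return {A.X - B.X, A.Y - B.Y, A.Z - B.Z}; }
Vec3 Add(Vec3 A, Vec3 B) { return {A.X + B.X, A.Y + B.Y, A.Z + B.Z}; }
Vec3 Scale(Vec3 A, float S) { return {A.X * S, A.Y * S, A.Z * S}; }
float Dot(Vec3 A, Vec3 B) { return A.X * B.X + A.Y * B.Y + A.Z * B.Z; }

// Closest point on segment AB to P: project P onto AB, clamp t to [0, 1].
Vec3 ClosestPointOnSegment(Vec3 A, Vec3 B, Vec3 P) {
    Vec3 AB = Sub(B, A);
    float LenSq = Dot(AB, AB);
    if (LenSq == 0.0f) return A;  // degenerate segment
    float T = Dot(Sub(P, A), AB) / LenSq;
    if (T < 0.0f) T = 0.0f;
    if (T > 1.0f) T = 1.0f;
    return Add(A, Scale(AB, T));
}

// Closest point on a polyline approximating the spline.
// Precondition: Points is non-empty.
Vec3 ClosestPointOnPolyline(const std::vector<Vec3>& Points, Vec3 Listener) {
    Vec3 Best = Points.front();
    Vec3 D0 = Sub(Listener, Best);
    float BestDistSq = Dot(D0, D0);
    for (std::size_t i = 0; i + 1 < Points.size(); ++i) {
        Vec3 C = ClosestPointOnSegment(Points[i], Points[i + 1], Listener);
        Vec3 D = Sub(Listener, C);
        float DistSq = Dot(D, D);
        if (DistSq < BestDistSq) {
            BestDistSq = DistSq;
            Best = C;
        }
    }
    return Best;
}
```

Each tick, the emitter would be moved to the returned point, so the sound always appears to come from the nearest part of the river or treeline.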

My spline emitter blueprint does exactly this. However, whilst developing this project, I encountered a significant performance issue caused by the combination of a moving emitter and the collider component mentioned previously. Moving a large collider at runtime causes its overlaps to be re-evaluated with every movement, resulting in significant slow-downs.

Potential solutions include adding a large, static collider that encompasses the maximum attenuation range along the whole length of the spline, or tracking the player's distance at an interval and comparing it to the event's maximum attenuation to determine whether the player is within earshot. I look forward to investigating both of these solutions in future work.
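The second option, polling distance at an interval, might look something like the following sketch. `EarshotPoller` is a hypothetical name and the interval is an assumed tuning value. Comparing squared distances avoids a square root, and the first tick checks immediately so the emitter does not wait a full interval after spawning.

```cpp
// Hypothetical helper for the "poll distance at an interval" approach.
// DistanceSq is the squared distance from the listener to the closest point
// on the spline; MaxAttenuation is the event's max attenuation radius.
class EarshotPoller {
public:
    EarshotPoller(float MaxAttenuation, float IntervalSeconds)
        : MaxAttenuationSq(MaxAttenuation * MaxAttenuation),
          Interval(IntervalSeconds),
          Accumulated(IntervalSeconds) {}  // check on the very first tick

    // Call every frame. The distance comparison only runs once per Interval;
    // between checks, the previous result is returned unchanged.
    bool Tick(float DeltaSeconds, float DistanceSq) {
        Accumulated += DeltaSeconds;
        if (Accumulated >= Interval) {
            Accumulated = 0.0f;
            bInEarshot = DistanceSq <= MaxAttenuationSq;
        }
        return bInEarshot;
    }

private:
    float MaxAttenuationSq;
    float Interval;
    float Accumulated;
    bool bInEarshot = false;
};
```

The owning actor would post the event when `Tick` flips to true and stop it when it flips back to false, replacing the moving collider entirely.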

Jar Break BP

Setting up audio for physics-based interactions, such as those driven by the Chaos destruction system, can be a challenge. Luckily, Chaos provides many delegate events that can be bound to process the data produced by Chaos interactions. In this case, the custom event "BreakJar" was bound to the "Chaos Break Event", providing access to data such as "Break Event Velocity" that is used to determine when audio should be played.

As potentially hundreds of break events can be fired during Chaos destruction, execution of any audio-related nodes needed to be gated to prevent spamming. This was achieved with a "Do Once" node that is reset after a set time delay.
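In code, a "Do Once" that resets after a delay is equivalent to a gate that stays closed for a fixed time after it last let something through. The sketch below is hypothetical (`TimedDoOnceGate` is an invented name) and takes the current time as a parameter rather than relying on an engine timer, which keeps it easy to test.

```cpp
// Hypothetical equivalent of a Blueprint "Do Once" node reset by a "Delay".
class TimedDoOnceGate {
public:
    explicit TimedDoOnceGate(double ResetDelaySeconds)
        : ResetDelay(ResetDelaySeconds) {}

    // Returns true if audio may be triggered at time Now (in seconds).
    // After passing, the gate stays closed until ResetDelay has elapsed.
    bool TryPass(double Now) {
        if (bHasFired && Now - LastFireTime < ResetDelay) {
            return false;  // still inside the cooldown window: drop the event
        }
        bHasFired = true;
        LastFireTime = Now;
        return true;
    }

private:
    double ResetDelay;
    double LastFireTime = 0.0;
    bool bHasFired = false;
};
```

Every break event would call `TryPass` with the current world time, and only a `true` result would go on to set parameters and post the shatter sound.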

The velocity value passed into Wwise was calculated as a weighted average of the normalized linear velocity and angular velocity. This takes into account the rotational velocity on impact which, as a listener would expect, should influence the intensity of the shattering sound.
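The weighted average can be sketched as follows. The normalization ranges, units and weights in `BreakIntensityParams` are assumptions for illustration only, since the values used in the project are not stated here. Each speed is clamped to 0..1 before weighting, so an extreme impact cannot push the result past the top of the expected range.

```cpp
#include <algorithm>

// Hypothetical tuning values; not the values used in the project.
struct BreakIntensityParams {
    float MaxLinearSpeed = 1000.0f;  // linear speed mapped to 1.0 (assumed units)
    float MaxAngularSpeed = 10.0f;   // angular speed mapped to 1.0 (assumed units)
    float LinearWeight = 0.7f;
    float AngularWeight = 0.3f;
};

// Clamps Value into [0, 1].
float Clamp01(float Value) { return std::min(std::max(Value, 0.0f), 1.0f); }

// Returns a 0..1 intensity: weighted average of the normalized speeds.
float ComputeBreakIntensity(float LinearSpeed, float AngularSpeed,
                            const BreakIntensityParams& P) {
    float L = Clamp01(LinearSpeed / P.MaxLinearSpeed);
    float A = Clamp01(AngularSpeed / P.MaxAngularSpeed);
    return (L * P.LinearWeight + A * P.AngularWeight) /
           (P.LinearWeight + P.AngularWeight);
}
```

The 0..1 result could then be scaled to the range of the RTPC that drives the shatter intensity in Wwise.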

Wwise Rooms & Portals, Diffraction & Transmission

The Wwise integration provides volume classes that can be added to a scene to calculate the diffraction and transmission of sound through and around world geometry. Wwise room volumes were added to all interior areas in the scene, and portals were placed at all doors and windows. These volumes allow the Wwise spatial audio pipeline to dynamically apply filtering and attenuation based on the angle of diffraction through a portal from audio emitters to the listener.
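Wwise computes diffraction internally from the room and portal geometry, and its actual model is more involved than this. The following is only a simplified geometric illustration of what "angle of diffraction through a portal" means, assuming the portal is reduced to a single point; `Vec3` and `DiffractionAngleDegrees` are hypothetical names. The angle is how far the path emitter, portal, listener bends away from a straight line.

```cpp
#include <algorithm>
#include <cmath>

// Minimal hypothetical vector type for this sketch.
struct Vec3 { float X, Y, Z; };

Vec3 Sub(Vec3 A, Vec3 B) { return {A.X - B.X, A.Y - B.Y, A.Z - B.Z}; }
float Dot(Vec3 A, Vec3 B) { return A.X * B.X + A.Y * B.Y + A.Z * B.Z; }
float Length(Vec3 A) { return std::sqrt(Dot(A, A)); }

// Deviation (degrees) of the path Emitter -> Portal -> Listener from a
// straight line. 0 means the listener is in direct line with the emitter
// through the portal; larger values mean the sound bends further.
float DiffractionAngleDegrees(Vec3 Emitter, Vec3 Portal, Vec3 Listener) {
    Vec3 In = Sub(Portal, Emitter);
    Vec3 Out = Sub(Listener, Portal);
    float LenIn = Length(In);
    float LenOut = Length(Out);
    if (LenIn == 0.0f || LenOut == 0.0f) return 0.0f;  // degenerate path
    // Clamp guards acos against rounding slightly outside [-1, 1].
    float CosAngle = std::min(std::max(Dot(In, Out) / (LenIn * LenOut), -1.0f), 1.0f);
    const float Pi = 3.14159265f;
    return std::acos(CosAngle) * 180.0f / Pi;
}
```

A larger angle would typically map to more filtering and attenuation, which is the behaviour the room and portal volumes provide without any custom code.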

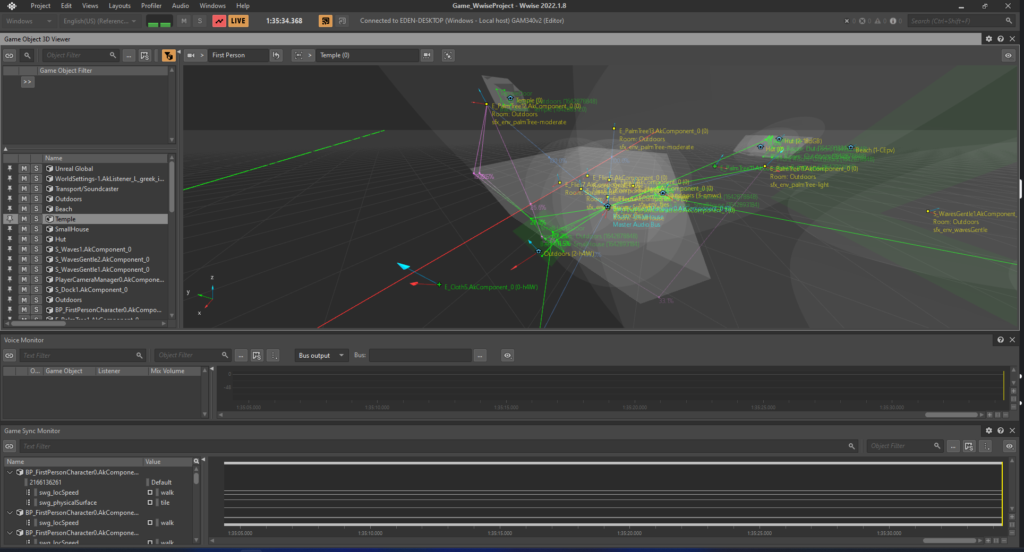

The view below is the Wwise Authoring "Game Object Profiler". This view shows all implemented spatial audio features in the level and their current states, allowing for fast debugging and setup when implementing rooms and portals in this scene.

In this example, the player is standing inside a small building, and diffraction values are being calculated for audio emitters outside the room.