
Overview

This is the first in a series of development logs that I will be writing throughout the production cycle of my third-year Major Development Project. Over the next few months, I will be working as part of a multidisciplinary team to create a cooperative space adventure game. Our chosen engine is Unreal Engine 5.1, and we will be using Wwise for audio implementation. My role within the team is “Technical Audio Designer”, and my responsibilities include sound design and recording, Wwise implementation, C++ audio scripting, tool creation, and engine assistance for programmers and artists.

While visuals and gameplay are essential elements of any game, it’s often the audio that brings the world to life. In this devlog, I’ll outline four key considerations I researched during the first two weeks of pre-production: UI Design Language, Reflecting Player/Environment Relationships for Two Players Simultaneously, Split-Screen Considerations & Applicable Conclusions, and Representing Player Movement Through Contradicting Environments.

Research Summary

UI Design Language

Ross Tregenza and Yuta Endo offer valuable insights into creating immersive UI experiences. Tregenza emphasizes three essential elements: flavour, feedback, and language. Flavour sets the mood, feedback provides tactile satisfaction, and language conveys critical information.

Endo discusses Animal Crossing’s satisfying sound design, focusing on the concept of “function meets joy.”

Key Takeaways:

- Balancing Function and Joy: Strive for a balance between functional and enjoyable UI sounds.

- Real Sounds: Incorporate real-world sounds for a more immersive player experience.

- Iterate and Test: Continuous testing and player feedback are vital for refining UI sounds.

- Consistency: Maintain a consistent style throughout the game for a cohesive audio experience.

- Player-Centric Approach: Consider the impact of UI sounds on player engagement and overall experience.

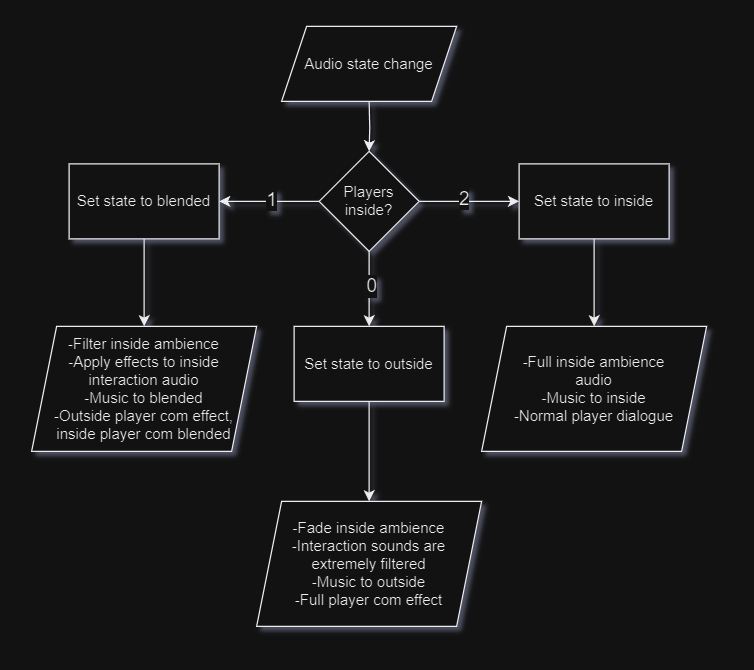

Reflecting Player/Environment Relationships for Two Players Simultaneously

Designing audio for environments that players move between, such as a spaceship interior and outer space, presents unique challenges. Because one player may be inside the ship while the other is outside, each player needs to hear a clear and distinct version of their own environment at the same time.

Key Solutions:

- Distinct Audio Filters: Apply different filters to simulate each environment’s characteristics.

- Volume and Panning: Use volume and panning techniques to differentiate between environments.

- Environmental Audio Cues: Include specific environmental cues for each setting.

- Music Transitions: Adapt in-game music to match the player’s location.

- Dynamic Effects: Utilize dynamic audio effects like echoes and muffling to enhance player immersion.

- Communication and Dialogue: Apply audio cues to represent communication challenges, like speaking through a spacesuit or intercom.

- Continuous Testing: Regularly test and refine audio design to maintain the intended experience.
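To make the filter and volume ideas above more concrete, here is a minimal C++ sketch, not taken from our project, of how each player could carry their own environment blend so that the two mixes never interfere. The `EnvironmentMix` struct, the `blendEnvironment` function and the cutoff and volume values are hypothetical placeholders; in Wwise these values would more likely drive a per-player RTPC or state than be computed by hand.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical mix settings applied to everything one player hears.
struct EnvironmentMix {
    double lowPassHz; // low-pass cutoff on that player's world sounds
    double volumeDb;  // overall gain offset in decibels
};

// Placeholder values for illustration, not tuned numbers from the project.
constexpr EnvironmentMix kShipInterior{20000.0, 0.0};
constexpr EnvironmentMix kOpenSpace{800.0, -12.0};

// Blend between the two environments for a single player.
// outside = 0.0 means fully inside the ship, 1.0 means fully in open space,
// and values in between represent a transition such as an airlock.
EnvironmentMix blendEnvironment(double outside) {
    const double t = std::clamp(outside, 0.0, 1.0);
    EnvironmentMix mix;
    // Interpolate the cutoff geometrically so the sweep is even across octaves.
    mix.lowPassHz = kShipInterior.lowPassHz *
                    std::pow(kOpenSpace.lowPassHz / kShipInterior.lowPassHz, t);
    // Decibels are already logarithmic, so a linear blend is fine here.
    mix.volumeDb = kShipInterior.volumeDb +
                   (kOpenSpace.volumeDb - kShipInterior.volumeDb) * t;
    return mix;
}

// Each player keeps their own transition value, so the two mixes share no state.
struct PlayerAudioState {
    double outside = 0.0;
    EnvironmentMix mix() const { return blendEnvironment(outside); }
};
```

For example, a player halfway through an airlock (`outside = 0.5`) gets a 4 kHz cutoff at -6 dB, while a player still inside keeps the unfiltered interior settings.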

Split-Screen Considerations & Applicable Conclusions

In “It Takes Two”, Hazelight Studios addressed the audio challenges of split-screen gameplay with a combination of technical and creative solutions. Several of these insights can be adapted for stereo output to provide a more engaging co-op gaming experience.

Relevant Conclusions

In a co-op game that uses a single, fixed-perspective screen rather than split-screen, the audio spatialisation techniques used in “It Takes Two” can be adapted for standard stereo output so that the game works across a wider range of output devices. The channel-based format (5.1 surround sound), which positions sounds based on their visual location on the screen, can be mixed down to stereo. This involves converting the multichannel audio into two channels, left and right, while preserving as much of the spatial information as possible.

Sounds originally intended for the centre and rear channels of a 5.1 setup are blended into the left and right channels of the stereo mix, typically at reduced gain so they do not overpower the front channels. This ensures that players using stereo output still experience a sense of directionality and immersion, because what they hear aligns with the visual cues on screen.
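The downmix described above can be sketched per sample frame. This C++ sketch assumes an L, R, C, LFE, Ls, Rs channel order and uses the widely used ITU-R BS.775 coefficients (centre and surrounds at -3 dB, LFE dropped); it illustrates the general technique and is not the method used in “It Takes Two”.

```cpp
// One sample frame of 5.1 audio. The L, R, C, LFE, Ls, Rs field order is an
// assumption; real buffers may interleave channels in a different order.
struct Frame51 {
    float L, R, C, LFE, Ls, Rs;
};

struct FrameStereo {
    float L, R;
};

// Fold a 5.1 frame down to stereo using ITU-R BS.775-style coefficients:
// centre and surrounds are mixed in at -3 dB (a gain of 1/sqrt(2)),
// and the LFE channel is dropped, as is common in stereo downmixes.
FrameStereo downmixToStereo(const Frame51& in, bool normalize = false) {
    constexpr float kMinus3dB = 0.70710678f; // 1 / sqrt(2)
    float left  = in.L + kMinus3dB * in.C + kMinus3dB * in.Ls;
    float right = in.R + kMinus3dB * in.C + kMinus3dB * in.Rs;
    if (normalize) {
        // In the worst case each side sums three full-scale inputs
        // (1 + 2 * 0.707), so scaling by the reciprocal rules out clipping
        // at the cost of overall level.
        constexpr float kNorm = 1.0f / (1.0f + 2.0f * kMinus3dB);
        left *= kNorm;
        right *= kNorm;
    }
    return {left, right};
}
```

Summing three channels into each side can exceed full scale, which is why the optional `normalize` flag scales by 1 / (1 + 2 × 0.707) ≈ 0.414; whether that level loss is acceptable is a mixing decision.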

The concept of “spatial padding” can also be applied in this context. It decouples sound attenuation from panning, which can make the stereo mix more accurate. This is particularly useful in co-op games, where players often act together or stand close to each other in the game world. By preventing doubled output and keeping the mix clear when both players hear a sound from a similar reference point, it contributes to a more engaging and immersive co-op experience.
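The sketch below is my own simplified reading of the spatial padding idea, not Hazelight's implementation: a sound both players can hear is rendered as a single voice, its attenuation comes from whichever player is closer, and its pan comes from the emitter's position on the shared screen rather than from either player. All names, the 2D positions and the linear falloff curve are assumptions for illustration.

```cpp
#include <algorithm>
#include <cmath>

struct Vec2 {
    double x, y;
};

// A single voice for an emitter that both players can hear.
struct SharedVoice {
    double gain; // 0..1 distance attenuation
    double pan;  // -1 = hard left, 0 = centre, +1 = hard right
};

// Hypothetical linear falloff: full volume at the emitter, silent at maxDistance.
double linearAttenuation(double distance, double maxDistance) {
    return std::clamp(1.0 - distance / maxDistance, 0.0, 1.0);
}

// Attenuation and panning are resolved separately:
// - gain uses whichever player is closer, so the emitter plays once
//   instead of being summed for each listener (no doubled output);
// - pan follows the emitter's horizontal screen position (0..1),
//   so it stays tied to the shared camera rather than to either player.
SharedVoice resolveSharedEmitter(Vec2 emitter, Vec2 player1, Vec2 player2,
                                 double screenX, double maxDistance) {
    const double d1 = std::hypot(emitter.x - player1.x, emitter.y - player1.y);
    const double d2 = std::hypot(emitter.x - player2.x, emitter.y - player2.y);
    const double gain = linearAttenuation(std::min(d1, d2), maxDistance);
    const double pan = std::clamp(screenX * 2.0 - 1.0, -1.0, 1.0);
    return {gain, pan};
}

// Equal-power pan law turning the voice into left/right channel gains.
void toStereoGains(const SharedVoice& v, double& left, double& right) {
    const double kQuarterPi = 0.78539816339744831;
    const double angle = (v.pan + 1.0) * kQuarterPi; // 0 .. pi/2
    left = v.gain * std::cos(angle);
    right = v.gain * std::sin(angle);
}
```

With the emitter at the origin, player 1 five units away and player 2 ten units away, a 20-unit falloff gives a single voice at a gain of 0.75 (set by the nearer player), panned centre because the emitter sits mid-screen, instead of two overlapping voices.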

Representing Player Movement Through Contradicting Environments

Designing audio for a game where the player can move from inside a spaceship to outer space presents a unique set of challenges. Here are a few of them, along with potential solutions:

Designing Separate Movement Sounds for Different Gravity Conditions

In a game where the player can move from inside a spaceship (with artificial gravity) to the vacuum of space (zero gravity), the sounds associated with the player’s movements should change with their environment, because a person would perceive sound very differently in each. The challenge is to balance the players’ expectations of what things “should” sound like against the need for clear audio feedback on their actions.

Inside the Spaceship: Here, we want to create sounds that reflect the player’s interaction with a solid surface. This could include footsteps, the rustling of clothing, or the clinking of equipment. These sounds would be very tactile and responsive, varying with the surface the player is interacting with.

In Space: In contrast, the vacuum of space has no medium for sound to travel through, and without gravity there are no impact sounds such as footsteps. However, for gameplay purposes we still need some sounds to indicate player movement. These could be muffled, low-frequency sounds that simulate what someone might hear through vibrations in their spacesuit, as well as contextual sounds such as small suit-mounted thrusters like those used on NASA astronaut propulsion units.
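As a rough illustration of the “muffled” treatment, a one-pole low-pass filter is about the simplest processing that removes high frequencies while keeping low-frequency content. In Wwise this would normally be handled by the built-in low-pass filter on the sound object, driven by a state or RTPC, so the C++ class below is only a hypothetical sketch of what that processing does; the cutoff actually used is a design decision.

```cpp
#include <cmath>

// Simple one-pole low-pass filter: y[n] = y[n-1] + a * (x[n] - y[n-1]).
// Lowering the cutoff removes more high-frequency content, which reads as "muffled".
class OnePoleLowPass {
public:
    OnePoleLowPass(double cutoffHz, double sampleRate) { setCutoff(cutoffHz, sampleRate); }

    void setCutoff(double cutoffHz, double sampleRate) {
        const double kTwoPi = 6.283185307179586;
        // Standard one-pole coefficient; the -3 dB point lands close to
        // cutoffHz when the cutoff is well below the sample rate.
        a_ = 1.0 - std::exp(-kTwoPi * cutoffHz / sampleRate);
    }

    // Process one sample and return the filtered value.
    double process(double x) {
        y_ += a_ * (x - y_);
        return y_;
    }

private:
    double a_ = 1.0; // smoothing coefficient (1.0 would pass the input unchanged)
    double y_ = 0.0; // previous output sample
};
```

With a 500 Hz cutoff at 48 kHz, a constant input settles at full level, while a signal alternating every sample (the highest frequency a 48 kHz stream can carry) comes out at roughly 3% of its original amplitude.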

Wwise Implementation

In terms of setting up the behaviour for this movement system, it will be much the same as any ordinary Switch Container hierarchy. Instead of switches for running and crouching, we will have “Gravity” and “No Gravity”, each containing its own switches, for example for surface material or propulsion intensity. The real challenge lies in the audio design considerations discussed above.
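The nested switch logic can be sketched on the game side as a function that decides which switch groups to set for one player. The group and state names (`Gravity_State`, `Surface_Material`, `Propulsion_Intensity`), the surface list and the three-way thrust bucketing are hypothetical; they would need to match whatever is actually authored in the Wwise project.

```cpp
#include <string>
#include <utility>
#include <vector>

enum class GravityMode { Gravity, NoGravity };
enum class Surface { Metal, Grating, Rubber };

// A (switch group, switch state) pair as it might be authored in Wwise.
using SwitchAssignment = std::pair<std::string, std::string>;

// Hypothetical group and state names. The top-level group picks the Gravity or
// No Gravity branch, and only the child group belonging to that branch is set.
std::vector<SwitchAssignment> movementSwitches(GravityMode mode, Surface surface,
                                               float thrust01) {
    std::vector<SwitchAssignment> out;
    if (mode == GravityMode::Gravity) {
        out.emplace_back("Gravity_State", "Gravity");
        switch (surface) {
            case Surface::Metal:   out.emplace_back("Surface_Material", "Metal"); break;
            case Surface::Grating: out.emplace_back("Surface_Material", "Grating"); break;
            case Surface::Rubber:  out.emplace_back("Surface_Material", "Rubber"); break;
        }
    } else {
        out.emplace_back("Gravity_State", "No_Gravity");
        // Bucket a continuous 0..1 thrust value into three intensity switches.
        const char* level = thrust01 < 0.33f ? "Low" : (thrust01 < 0.66f ? "Medium" : "High");
        out.emplace_back("Propulsion_Intensity", level);
    }
    return out;
}
```

Each returned pair would then be passed to the Wwise SDK's string overload of `AK::SoundEngine::SetSwitch(group, state, gameObjectID)` for that player's game object, or to the equivalent call exposed by the Unreal integration. If propulsion needs smoother variation than three buckets, a continuous RTPC set with `AK::SoundEngine::SetRTPCValue` may suit it better than a switch.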

Project Setup and Workflow Tools

A significant workflow improvement for my team is the auto-launch script I discussed previously in a blog post. Because of the communal nature of the Games Academy, we often have to move between workstations during work sessions and re-clone the project repository. Each re-clone means a long wait while UE5 compiles and loads the project; my script automates this process to reduce manual work and wasted time.

Details about this script can be found in my post: